This is a repost of an essay I wrote in 2017, which was later published in the Creativity Post. There are many more essays, talks, and links at library.in-process.net/everything-will-happen.

Trying to compute AGI (artificial general intelligence) is not new.

How far back this goes depends on whom you ask. For this write-up, I will focus on Minsky, McCarthy, and MIT’s AI Lab as the starting point (but we could easily go back to Turing and beyond).

When Minsky and McCarthy started the lab in 1959, they were very much set on computing general intelligence.

Machines that think, are conscious, and are capable of learning.

“There are two ways of looking at computing artificial intelligence. You can look at it from the point of view of biology or from the point of view of computer science. You could imitate the nervous system, as far as you understand the nervous system, or you can imitate human psychology, as far as you understand human psychology.”

To annotate: “looking at it from the point of view of biology…” refers to the kind of “wet” programming the brain does, with neurons, axons, and so forth. Simulating such a process most likely points to neural networks, which are (broadly speaking) networks modeled on the way the brain works: they cluster related logic in proximity and build hierarchies of information. “Looking at it from the point of view of psychology” is equally provocative, as it treats human intelligence as buckets of knowledge.

To push that slightly further, here is a quote by Marvin Minsky, part of a monologue in Machine Dreams.

“In order (for a machine) to be intelligent, we have to give it several different kinds of thinking. When it switches from one of those to another, we will say that it is changing emotions.” “Emotion (in itself) is not a very profound thing; it’s just a switch between different modes of operation.”

This is the other way of computing a brain. Clusters of knowledge and sets of actions are compartmentalized in different vertical buckets and switched by emotions.

Beyond being offensive to humanity, this argument is also straightforward to debunk using a quick thought experiment.

Imagine an intellectual person sitting in a chair, doing nothing at all, staring into thin air. That person is conscious and intelligent. Her lack of action does nothing to rob her of the titles “conscious” and “intelligent.”

In other words, intelligence is not conditioned by action. It need not be modeled around goals or around operational switches.

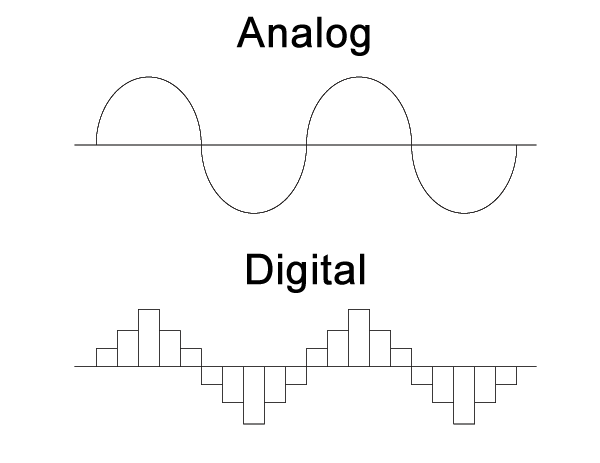

This is a critical point to dwell on. The idea that we can compute human intelligence (we can’t), or that the brain is a computer (it’s not), is the underlying belief that has fueled generations of researchers, keen and persistent in their pursuit to compute a brain. Alan Watts refers to life as an ongoing oscillation: a continuous frequency of the brain.

You can imagine your intelligence (or soul, or consciousness) as a vibrating frequency, like the trajectory of an analog sound wave as it travels through space. It is continuous and, unlike a digital signal, it is not broken into a set of discrete, high-fidelity instances.

A digital sound wave, say an MP3 file, is economical in storage because it can trim the edges of the frequency range and then create a high-res, sound-alike composition.

Let’s say that I could somehow peer into your brain and start creating a map of your intelligence. It may take me up to a few decades, but eventually, I will succeed in computationally solving your intelligence.

The problem is that I only solved the computation of your brain, and only at one point in time.

In other words, I only solved one instance of intelligence.

That instance is anchored to a single point in time and a single subject. The brain, like consciousness and intelligence, is an ongoing analog frequency, not a series of instance-based digital permutations.

For a much broader palette of opinions on the prospect of AGI (artificial general intelligence), I highly recommend What to Think About Machines That Think, edited by John Brockman.

If you’re still reading, it is safe for me to assume that you’re on board with my pro-human view: that is, the view that the brain is not a computer problem, and hence we should abandon the idea of AGI. Narrow AI, on the other hand, is alive and well.

Once we accept the premise that AGI is of no use, we can start identifying the opportunities in narrow AI. Think of your calculator as a narrow expert in calculation, a medical-journal scanner as the best tool we have for consuming terabytes of medical journals, and machine-vision algorithms as the best face recognizers.

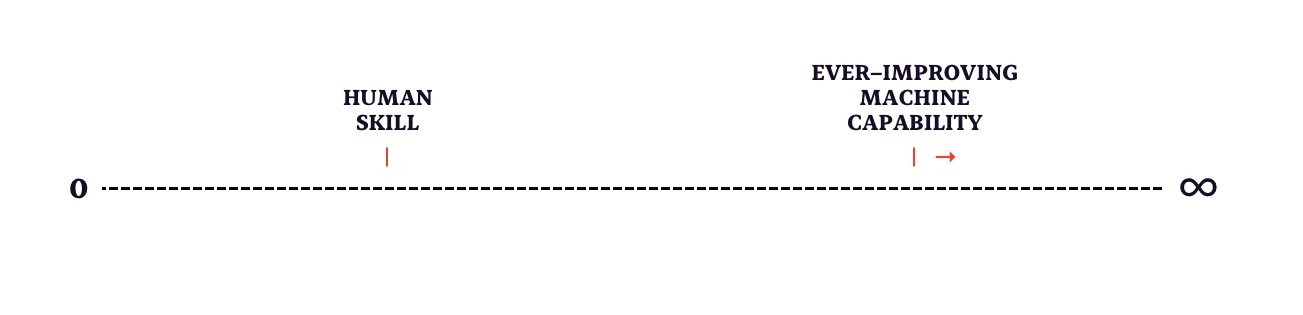

We can do the same tasks the machine is doing: calculate on a piece of paper, skim journals, and classify images, but only to a certain extent. Let’s draw this.

Intelligence on a narrow plane

On the left is ‘0’: no intelligence is being used, and nothing is being done. We can learn to perform a task and, as a next step, relay this knowledge to a machine so that it can hyper-mathematize it.

We can foresee improvements in computing power, access to data, and other technologies pushing the machine’s ability into infinity and the unknown. ON A NARROW DOMAIN. A single plane. Life, as a system, contains many of these trajectories: flying, writing, internet browsing, coding, dancing, and driving. We learn new tasks, and some of them can graduate to automation.

New domains are added while others become obsolete; a self-driving car technician and a horseshoe maker are examples of each, respectively. We can imagine the system of life (i.e., humanity) as made of an infinite number of these single-line trajectories.

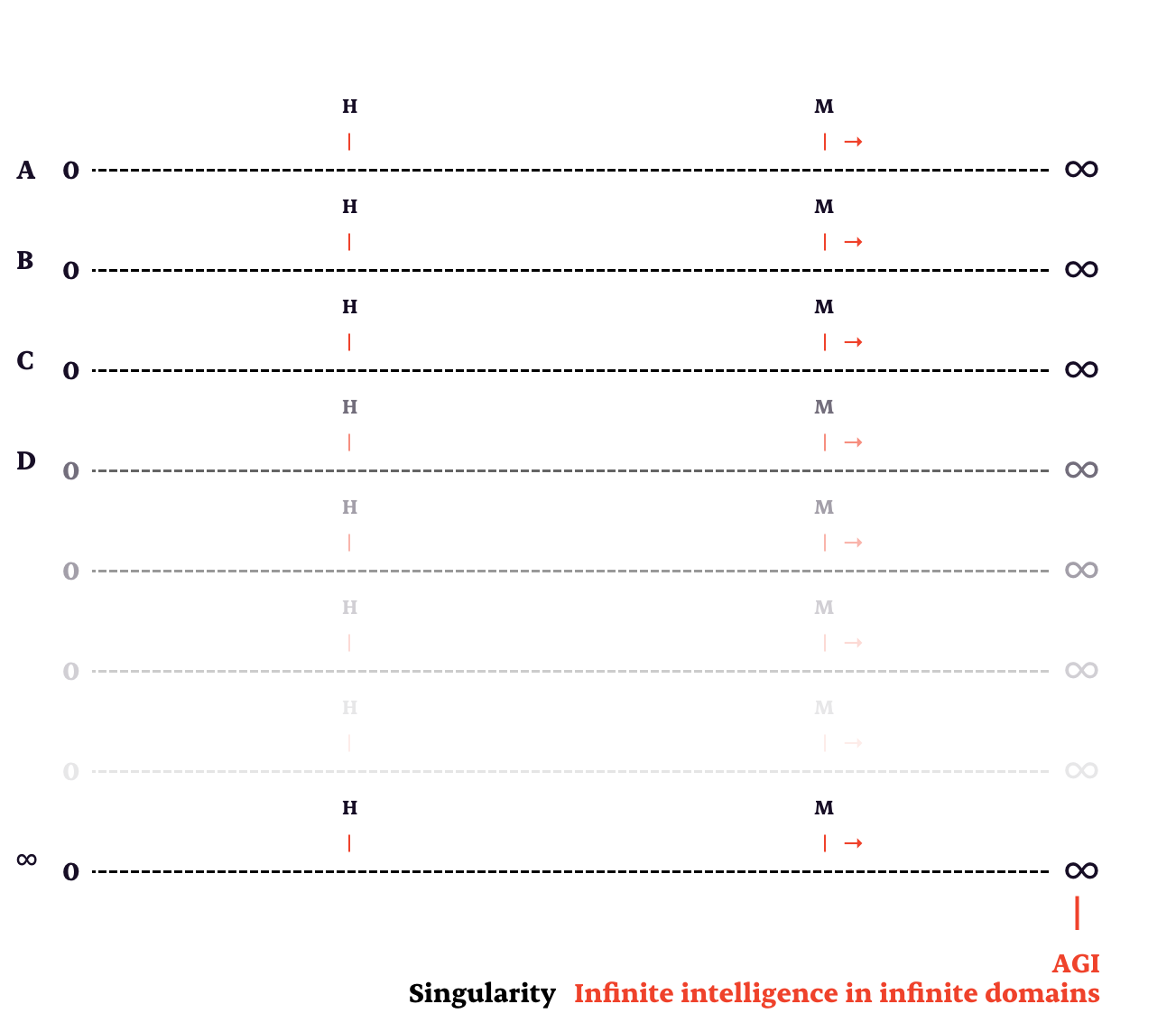

What would AGI need to achieve

Through this lens, we can position AGI in the bottom right, as holding infinite ability in infinite domains. But we have already established the fallacies of that view, which leaves us with a much more current and useful alternative.

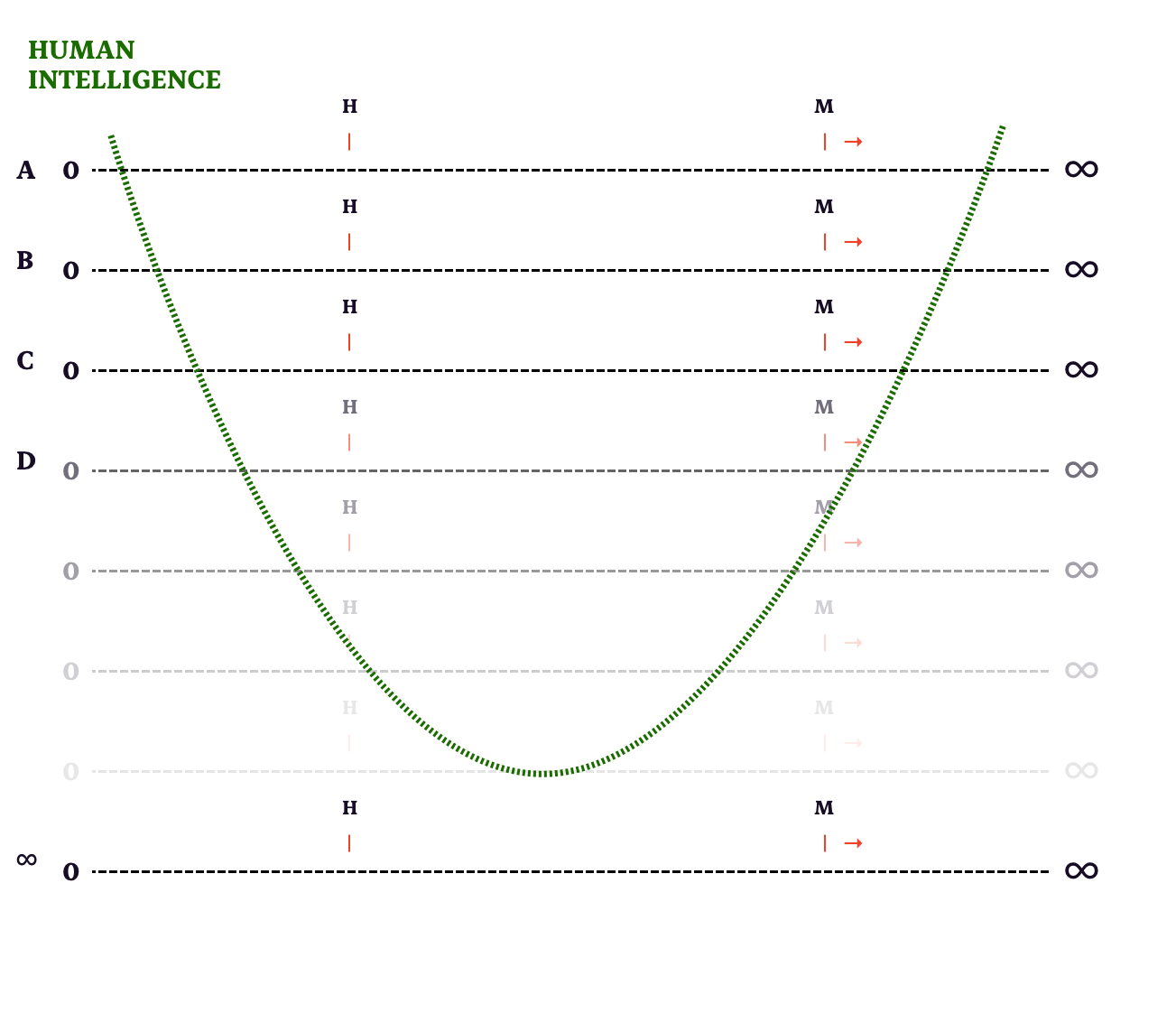

In the absence of AGI, it is up to us to navigate this bend: crossing and linking disparate skills and disciplines. We hold an intellectual monopoly in that regard.

Machines are competent in their unique domains, but are blind to anything else; they only compute and extend the steps we relayed to the domain.

Another way of thinking about it: when we come up with a new skill or technology, we might slowly improve it, maturing the domain so that other humans can participate in it. As part of that onboarding, sets of instructions need to be written. At which point is a domain ready for a machine to excel in it?

That machine is domain-specific and holds no intelligence. Still, its algorithms can do many things we never could, such as untangling messy data or making assumptions about the future.

But the bend is uniquely creative and can’t be mechanically produced.

This is the core of it. If your product or service is narrow by design, then you are open to a machine excelling at it, or replacing you.

This is not limited to unskilled jobs, as the machine holds no interest in your prestige. It holds no interest at all. The system can perform tasks efficiently. And if your job sits in a narrow domain and can be broken into steps, then you’re not using your human advantage properly.

Our unique, innate ability as creative humans is a crucial aspect of our identity. It will reveal the currents of automation and AI, and with them, opportunities for innovation.

Nitzan Hermon is a designer and researcher of AI, human-machine augmentation, and language. Through his writing and his academic and industry work, he is shaping a new, sober narrative for the collaboration between humans and machines.